Marius Masalar (composer for Plague Inc. and Rebel Inc.) has written a fascinating blog post about his process of writing music for Rebel Inc.

Learn more about Marius on his personal blog: https://mariusmasalar.me/

Buy the music for Plague Inc. and Rebel Inc. on Bandcamp or Steam

The Music of Rebel Inc.

A deep dive into what it means to write music for games

Game audio can feel like a thankless industry.

In our distracted world, the idea of listening to music for music’s sake is rare enough as it is. To expect people to notice and appreciate music when their attention is being drawn to enjoyable gameplay is just unrealistic.

Yet music is a critical aspect of establishing the gameplay experience, even if it’s mostly subliminal. It sets the mood, suggests pacing, and offers a sense of triumph in moments of success.

In Rebel Inc., a popular strategy game from the creators of Plague Inc., the gameplay challenges you to avoid combat as you attempt to balance the needs of separate factions.

What follows is a peek behind a curtain few folks ever experience: the process of writing music for games. Specifically, my process for writing the music of Rebel Inc.

I’ve been scoring games for nearly fifteen years now, and I want to offer a glimpse of what that work involves. My hope is that it makes it easier to appreciate the tremendous effort and time that go into bringing music to your favourite titles.

Writing Music for Games

One of the reasons I love writing music for games over, say, film or television, is that games are dynamic.

In a film, a given scene will play exactly the same way no matter how many times you watch it. Musically, that makes it predictable and consistent—much easier to score. In a game, a given sequence could unfold hundreds of different ways depending on the programming of the game and the choices of the player.

This element of player agency is what makes writing music for games so satisfying. You never know exactly where and how the emotional peaks and valleys will present themselves, yet you need to make sure the musical accompaniment always feels like it’s supporting the action. It’s a tremendous and thrilling task.

Writing music for combat is fun but generally straightforward. Writing music for conflict is complicated and rewarding, which made this a very cool project for me to work on.

I’ve written a lot of this kind of music over the years, most notably for Plague Inc., so when I was brought on to develop the music for Rebel Inc. I wanted to bring to bear everything I’d learned.

Establishing the Musical Language

Before a single note is written, I start with planning and research to establish the “language” of the score.

Unlike Plague, Rebel is unique in that it has a more specific setting: a fictional country in the Middle East, with gameplay inspired by the insurgency in Afghanistan. From the beginning, it was important that I remain aware of this setting and find ways to incorporate it sensitively.

Research into the history of the region helped me understand that its musical identity was difficult to decipher. With similarities, influences, and cross-pollination from many neighbouring countries, it’s tricky to pin down a single clear path to authenticity.

This was further complicated by my desire to temper the regional sounds with harmonies that would be appropriate for the mostly Western ears encountering the music in-game. Many aspects of traditional Eastern music sound “wrong” to our ears because they utilize unfamiliar harmonic language or rhythmic techniques. While there is objectively no such thing as “wrong” in music, subjectively I have to be mindful of audience (and my own) biases and write around them.

There’s also an element of sonic branding at work; I had previously established a certain musical “voice” for Ndemic Creations’ games through my work on Plague Inc. and it was important to me that the music of Rebel feel like it was cut from the same cloth.

An example of Rebel Inc.’s gameplay.

In the end, I drew from many nearby musical traditions, including instrumentation from Afghanistan, Iran, Northern India, and Egypt to incorporate the flavours of the region. These instruments were joined by Western sounds like a string orchestra, synthesizers, and guitars.

Each cue in the game also tends to have a “showcase” instrument—often one of the traditional regional instruments—though I generally processed them through a series of effects to give them a contemporary edge. For Concrete Mirage, it’s the plaintive electric cello; Elusive Prey features a series of breathy woodwind leads—and so on.

These showcase instruments (along with differences in rhythm, tempo, and intensity) allowed me to give each cue a distinct flavour to match the stage it’s intended for.

Getting Started

Early on in his scoring process, Hans Zimmer—one of the most famous working film composers—is known for having a conversation with the creative team about the film and then writing a long, exploratory sketch (you can hear one such sketch on the soundtrack for Man of Steel). This sketch comes together before he’s seen much of the film or its concept art, yet it tends to contain the seeds that grow into the final score.

I’ve adopted the core of this process too. In many cases, I’m brought in before the game is in a playable state, so I often have very little in terms of finished visuals to draw inspiration from. Instead, I speak to the developers and try to understand the concept through discussion. From there, I develop a sketch that’s used as the basis for exploring the musical language of the project.

This sketch is unpolished but indicative, and I will go back and forth a couple of times with the developers until we both feel I’ve hit upon the right direction.

In September of 2018, a few days after our usual exploratory Skype call, I sent in the following sketch:

Though it’s thin and rough, it already contains many elements that will be familiar to those of you who’ve played Rebel Inc. and experienced its score in context.

Not long after, I was given a playable build of the game that I dug into to understand the pacing, mood, and gameplay beats that players would go through. Between that and further feedback, I was able to refine that initial piece of music and get it to the point where I was happy with how it contributed to the game.

Collaborative Process

As a composer, my job is to write music in service of the game, not my own ego. Sometimes, an idea I think is musically valuable gets vetoed because it doesn’t work in context.

Every so often though, one of these ideas makes it into the game anyway. In Rebel Inc.’s main gameplay cue, Emerald Dawn, there is a moment at 1:27 where a heavily processed guitar plays a descending duo of short trills that don’t quite fit into the piece’s musical key.

Initially, the team suggested I remove this element as it was noticeably dissonant. I pushed back and argued that it was an important, calculated use of dissonance.

Why? Tension.

Establishing a mood of conflict requires a delicate balance between harmony and dissonance—an ebb and flow; otherwise, the music feels too flat, boring, and ambient. It becomes nothing but wallpaper. In the end, that element was allowed in and represents one of the more distinctive moments in the cue.

This is a key part of what makes working as a composer rewarding: it’s a collaborative process. You become a trusted creative voice and the conversation elevates both the music and the project it’s attached to.

Writing Music

Time to get geeky.

It’s all well and good for me to say that I write music, but I recognize that for many people that statement is inscrutable. What does “write music” mean these days? Is he sketching notation on paper and handing it to musicians to perform? Is he assembling loops in GarageBand?

The answer to both questions is no, and to explain what the process actually looks like, you have to understand a bit more about how modern music production works.

Broadly speaking, I work in Logic Pro X on an iMac. Sitting on my desk in front of it is a MIDI keyboard: a device that looks like a piano and sends data to my computer. MIDI is the language of that data, and it includes information like what keys I press, how much force I use, how long I hold them down for, and more.
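For the curious, here is a minimal Python sketch of what that data looks like. It assumes the standard three-byte MIDI 1.0 Note On and Note Off messages, and the helper names are hypothetical. Note that MIDI doesn’t store a note’s length directly: how long a key is held is simply the time between its Note On and its Note Off.

```python
def note_on(channel: int, note: int, velocity: int) -> bytes:
    """3-byte MIDI Note On: status 0x90 + channel, note number, velocity."""
    _check(channel, note, velocity)
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, release_velocity: int = 64) -> bytes:
    """3-byte MIDI Note Off: status 0x80 + channel, note number, release velocity."""
    _check(channel, note, release_velocity)
    return bytes([0x80 | channel, note, release_velocity])


def _check(channel: int, note: int, velocity: int) -> None:
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 0-15; note and velocity must be 0-127")


def key_press(channel, note, velocity, start_s, held_s):
    """One held key = two timestamped messages; the gap between them is the duration."""
    return [
        (start_s, note_on(channel, note, velocity)),
        (start_s + held_s, note_off(channel, note)),
    ]


# Middle C (note 60), struck fairly hard on MIDI channel 1 (index 0), held half a second.
for t, msg in key_press(channel=0, note=60, velocity=100, start_s=0.0, held_s=0.5):
    print(f"{t:.2f}s  {msg.hex(' ')}")
# 0.00s  90 3c 64
# 0.50s  80 3c 40
```

Velocity (how hard the key was struck) travels as a number from 0 to 127, and the recording software timestamps each message as it arrives.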

With a few exceptions, everything you hear is played live by yours truly on that keyboard: note by note, instrument by instrument, section by section.

This is the MIDI keyboard I use, in case anyone is curious. It’s a Nektar Panorama P6.

There are, sadly, no live musicians involved in the recording of any of my pieces. Budgets rarely allow for it, so for most composers, the days of writing notation and going to a recording studio are a distant memory.

Back at my computer, the keyboard itself makes no sound. Instead, it sends those MIDI signals to Logic, where I employ virtual instruments to produce the sounds you hear. Virtual instruments are either synthesizers programmed to replicate real instruments, or they’re sample libraries.

You can think of sample libraries as gigantic bundles of sound recordings programmed to respond to MIDI information. They are generally immense, difficult to produce, and extremely expensive (if you thought photography was an expensive hobby…). For instance, a sample library of a piano might contain the following:

- A recording of each note on the piano, held until the sound stops ringing

- A recording of each note played very briefly to capture the sound of the “release” of a note when you lift your finger off the key

- Each note struck at several different intensities—held and short—to capture the changes in timbre (the tonal quality of a sound that distinguishes it from other sounds) that occur. A note sounds very different when it’s played forcefully vs gently

- Several repeated performances of each note (at each length and intensity!) so that if you perform the same note repeatedly in a piece, it doesn’t sound fake (we call this the “machine-gun effect” because playing the same exact recording over and over sounds mechanical)

- That entire set recorded again, this time with one or more of the pedals held down

- Separate recordings of the pedal sound so the software can layer it in to give a realistic sense of the mechanical sounds of the piano in the piece

- All of the above captured from at least two microphone positions to offer more control over the sound in the mix

…As you can probably guess, this results in a gigantic amount of audio data, especially since sample libraries are recorded in high resolution for maximum processing leeway in the mix. A single modern piano sample library can easily take up 100 GB or more of disk space, and, in the right hands, its output is virtually indistinguishable from a real recording to all but the most discerning ears.
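To see how quickly the numbers balloon, here is a back-of-the-envelope estimate in Python. Every figure in it (velocity layers, round robins, average note length, 96 kHz/24-bit stereo) is an illustrative assumption rather than the spec of any real library, and it ignores pedal-noise samples entirely.

```python
def library_size_bytes(
    keys=88,
    velocity_layers=8,      # assumed: how many strike intensities were recorded
    round_robins=3,         # assumed: repeated takes per note and intensity
    pedal_states=2,         # pedal up / pedal down
    mic_positions=2,
    sustain_s=12.0,         # assumed average length of a held note until it dies away
    release_s=1.0,          # assumed length of each release recording
    sample_rate=96_000,     # "high resolution" assumed to mean 96 kHz...
    bytes_per_sample=3,     # ...at 24 bits
    channels=2,             # stereo
):
    """Rough uncompressed size of a sampled piano (pedal-noise samples ignored)."""
    sustains = keys * velocity_layers * round_robins * pedal_states * mic_positions
    releases = keys * velocity_layers * pedal_states * mic_positions
    seconds_of_audio = sustains * sustain_s + releases * release_s
    return seconds_of_audio * sample_rate * bytes_per_sample * channels


print(f"{library_size_bytes() / 1e9:.1f} GB")  # 60.0 GB
```

Even with these fairly modest guesses the total lands around 60 GB; doubling the microphone positions alone takes it past 100 GB.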

This sample data is wrangled by a sophisticated piece of software that maps the right note, intensity, etc. to the keys you press and intelligently plays back the right sounds depending on the MIDI signals it receives.
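A heavily simplified sketch of that mapping logic, in Python, might look like this. It assumes equal-width velocity layers and round robins cycled in order (some samplers pick them at random instead); the class and the file-naming scheme are hypothetical.

```python
from collections import defaultdict


class SampleMapper:
    """Hypothetical sampler logic: (note, velocity) -> one recorded file.

    Velocities 1-127 are split into equal-width layers. Each (note, layer)
    zone holds several round-robin takes, cycled in order so that repeating
    a note never retriggers the identical recording back to back.
    """

    def __init__(self, velocity_layers: int = 4, round_robins: int = 3):
        self.velocity_layers = velocity_layers
        self.round_robins = round_robins
        self._next_take = defaultdict(int)  # (note, layer) -> next take to play

    def layer_for(self, velocity: int) -> int:
        if not 1 <= velocity <= 127:
            raise ValueError("note-on velocity must be 1-127")
        return (velocity - 1) * self.velocity_layers // 127

    def pick(self, note: int, velocity: int) -> str:
        zone = (note, self.layer_for(velocity))
        take = self._next_take[zone]
        self._next_take[zone] = (take + 1) % self.round_robins
        return f"note{note:03d}_vel{zone[1]}_rr{take}.wav"  # hypothetical file naming


mapper = SampleMapper()
print([mapper.pick(60, 100) for _ in range(4)])
```

Four identical key presses come back as takes `rr0`, `rr1`, `rr2`, and then `rr0` again, which is how repeated notes sidestep the machine-gun effect.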

That’s hundreds of thousands of samples, many of which have to be loaded into RAM to enable real-time performance, plus a CPU-intensive software instrument to make it all work (I haven’t even touched on the deeper features of this part)—all living within Logic as a plugin.

And that’s just a single instrument in the arrangement. Most of the Rebel Inc. cues have 50 or so instrument tracks.

In fairness, not every instrument is that difficult to sample; most have a more limited note range than a piano, and a few have no differences in timbre because they can only play a single “loudness”. Still, it’s no surprise that this kind of music production is one of the most strenuous activities you can do with a modern computer.

Many working composers have had to rely on networked farms of desktop towers to handle their biggest projects, and it’s only recently that they’ve been able to contemplate running entire sessions on a single machine.

The Technical Details

It sounds a little bewildering when you lay out all the moving parts, but I want to keep digging a bit deeper to showcase a few of the small details of execution.

To do so, I’m going to unpack the latest cue I wrote for Rebel Inc.: the haunting Lethal Beauty.

Let’s start with the big picture. This is what the session looks like in Logic:

A bird’s-eye view of the Logic Pro X project for Lethal Beauty.

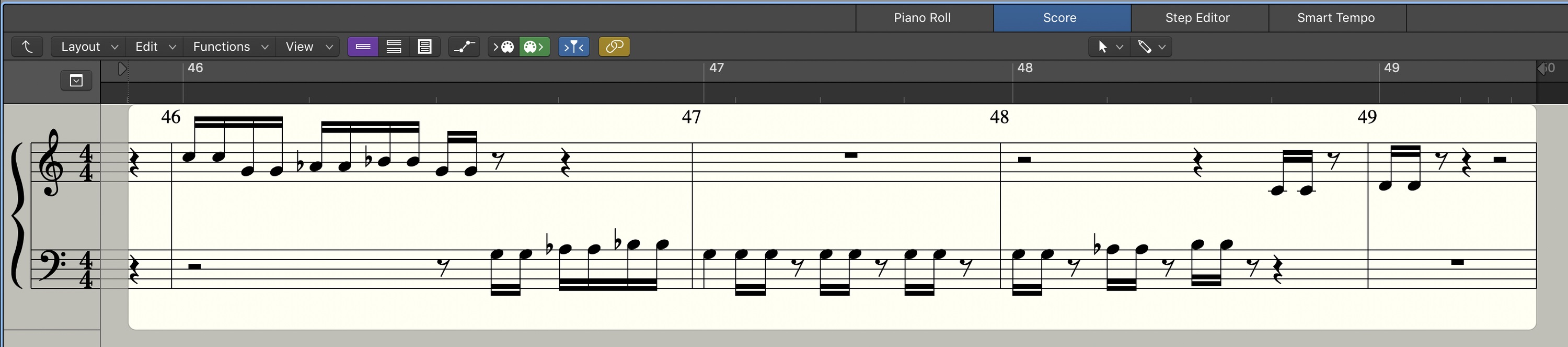

Each of those green blocks is called a “region”, and it contains a bunch of MIDI data. This data includes the notes I played, how hard and for how long I played each one, as well as other information that’s mapped to things like changes in timbre while the note is held.

For instance, a woodwind instrument can get louder and softer over the course of a single note depending on how much air the performer uses. This is an effect I have to replicate manually using a separate wheel on the keyboard that I’ve assigned to “breath” while I’m playing the notes. Otherwise, the loudness and timbre stay flat and the performance sounds fake, no matter how good the sample library is.

Each green block, or “region”, contains MIDI data that can be viewed in various ways.
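As a rough illustration of that breath control, the Python sketch below generates the kind of controller data a hand-performed swell produces. It assumes the wheel sends MIDI Control Change 2 (the spec’s “Breath Controller”); in practice many libraries listen to CC 1 or CC 11 instead, and the floor and peak values are arbitrary.

```python
import math

BREATH_CC = 2  # assumption: MIDI's "Breath Controller"; many libraries use CC 1 or CC 11


def control_change(channel: int, controller: int, value: int) -> bytes:
    """3-byte MIDI Control Change: status 0xB0 + channel, controller number, value."""
    return bytes([0xB0 | channel, controller, value])


def breath_swell(duration_s: float, steps: int = 16, floor: int = 40,
                 peak: int = 110, channel: int = 0):
    """Timestamped CC messages that rise from `floor` to `peak` at mid-note and
    fall back again, imitating a player pushing more air into a held note."""
    events = []
    for i in range(steps + 1):
        t = duration_s * i / steps
        shape = math.sin(math.pi * i / steps)  # 0 -> 1 -> 0 over the note
        value = round(floor + (peak - floor) * shape)
        events.append((t, control_change(channel, BREATH_CC, value)))
    return events


for t, msg in breath_swell(2.0, steps=4):
    print(f"{t:.2f}s  CC{msg[1]} = {msg[2]}")
```

A real performance is rarely this symmetrical, which is exactly why playing the curve by hand tends to sound more convincing than drawing it.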

What separates good composers from mediocre ones is this sensitivity to each instrument’s mechanics and idiomatic performance quirks. You have to understand how each instrument works in the real world to be able to convincingly replicate it with a virtual version.

In the composer community, one of the ways we spot virtual instrument performances is by noticing things that would be impossible for a live musician to accomplish: long phrases that don’t leave room for a brass player to breathe, for example, or performing multiple simultaneous notes on an instrument that can only play one note at a time.
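Those giveaways are mechanical enough to check automatically. Here is a hypothetical Python sketch that flags overlapping notes on a one-note-at-a-time instrument and phrases that never leave a gap to breathe; the 8-second phrase limit and 0.25-second breath are guesses for illustration, not rules from any orchestration text.

```python
from typing import NamedTuple


class Note(NamedTuple):
    start: float  # seconds
    end: float    # seconds
    pitch: int    # MIDI note number


def playability_warnings(notes, monophonic=True, max_phrase_s=8.0, min_breath_s=0.25):
    """Flag passages a live wind or brass player couldn't perform as written.

    The thresholds are illustrative guesses, not rules for any real instrument.
    """
    warnings = []
    ordered = sorted(notes)
    if not ordered:
        return warnings

    def check_phrase(start, end):
        if end - start > max_phrase_s:
            warnings.append(f"{start:.2f}s: {end - start:.1f}s phrase with no room to breathe")

    phrase_start, latest_end = ordered[0].start, ordered[0].end
    for note in ordered[1:]:
        if monophonic and note.start < latest_end:
            warnings.append(f"{note.start:.2f}s: overlapping notes on a one-note-at-a-time instrument")
        if note.start - latest_end >= min_breath_s:
            check_phrase(phrase_start, latest_end)  # a long enough gap ends the phrase
            phrase_start = note.start
        latest_end = max(latest_end, note.end)
    check_phrase(phrase_start, latest_end)
    return warnings


legato = [Note(float(i), float(i + 1), 60 + i % 5) for i in range(12)]
print(playability_warnings(legato))
```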

Lethal Beauty follows the same core instrumentation as the rest of the Rebel Inc. soundtrack: there is an orchestral string section, an ensemble of regional instruments, a set of atmospheric elements programmed in various synthesizers, a lot of percussion (both organic and synthesized), and a pair of heavily processed guitars.

One of the first things we hear is a thrumming percussive hit, performed on a large drum and run through a delay effect to enhance the spaciousness:

This quickly gives way to the first statement of the cue’s melody, played on a Turkish instrument called the Tambur:

This melody is echoed quietly by the Iranian Santur, a hammered dulcimer instrument that takes a more prominent role by 0:41:

This is also where the Indian percussion becomes more evident. I performed this pattern on an ensemble of six instruments: the Dholak, Kanjira, Ghatam, Mridangam, Pakhawaj, and Tabla:

There are several other percussion elements floating around subtly, including some synthesized metallic elements that add a bit of high-frequency interest:

At 0:51, another of the regional instruments makes an appearance: the Oud. This lute-like stringed instrument is common across the Middle East, particularly in Egypt, Syria, Iraq, and Lebanon. It almost sounds European, and I’ve used a delay effect on it to provide some colourful echo to the sound:
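Both the Oud here and the opening drum hit run through a delay. At its core, a feedback delay mixes the signal with a copy of itself from D samples earlier, and each repeat is fed back in at reduced volume. The Python sketch below shows that idea on a plain list of samples; the parameter values are illustrative, and commercial delay plugins add filtering, modulation, and stereo tricks on top.

```python
def feedback_delay(dry, delay_samples, feedback=0.45, mix=0.35):
    """Feedback delay (echo) on a list of samples.

    wet[n] = dry[n - D] + feedback * wet[n - D], with D = delay_samples;
    output = (1 - mix) * dry + mix * wet.
    """
    if delay_samples < 1 or not 0.0 <= feedback < 1.0:
        raise ValueError("need delay_samples >= 1 and 0 <= feedback < 1")
    line = [0.0] * delay_samples  # circular buffer: what will come out D samples later
    pos = 0
    out = []
    for x in dry:
        wet = line[pos]                    # signal written D samples ago
        line[pos] = x + feedback * wet     # feed input plus decayed echo back in
        pos = (pos + 1) % delay_samples
        out.append((1.0 - mix) * x + mix * wet)
    return out


impulse = [1.0] + [0.0] * 9
print(feedback_delay(impulse, delay_samples=3, feedback=0.5, mix=1.0))
# [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.25]
```

Delay times are usually set in musical units: a dotted eighth note at 120 BPM lasts 0.375 s, or 18,000 samples at 48 kHz.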

Periodically, there’s a trembling crescendo performed on an instrument called the Qanun. This is another zither-like instrument, thought to have originated in Ancient Greece, and its scratchy, nervous trills provide a useful accent:

You may have also noticed the sweeps of wind-like noise. This is a common transition technique in modern pop music, but I wasn’t satisfied with the usual pre-recorded whooshes. I wanted the ability to control the crescendo precisely (for example, at 0:03, right at the very beginning, where it quickly rises to a peak and then gradually tapers off).

To accomplish this, I programmed a simple patch in one of Logic’s stock synthesizers:

Retro Synth is an under-appreciated tool in Logic’s amazing arsenal of stock instruments.

This patch has a noise source with a low-pass filter applied. This filter cuts out high frequencies, allowing only low frequencies to pass through—hence the name. By changing the frequency of the cutoff point, you get that classic sweeping sound. I programmed the synth so I could control that frequency using my keyboard’s modulation wheel, and performed each sweep by hand.
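Reduced to code, that patch is roughly the following Python sketch: white noise through a low-pass filter whose cutoff follows the mod wheel. It is an approximation under stated assumptions. It uses a gentle one-pole filter (Retro Synth’s filters are steeper), and the 100 Hz to 12 kHz range and exponential wheel curve are illustrative choices, not the patch’s actual settings.

```python
import math
import random


def wheel_to_cutoff(cc_value: int, lo_hz: float = 100.0, hi_hz: float = 12_000.0) -> float:
    """Map a 0-127 mod-wheel value to a cutoff frequency on an exponential curve,
    so equal wheel movements sound like roughly equal changes in brightness."""
    return lo_hz * (hi_hz / lo_hz) ** (cc_value / 127)


def noise_sweep(wheel_values, sample_rate=48_000, samples_per_step=480, seed=1):
    """White noise through a one-pole low-pass filter whose cutoff follows the wheel.

    Each wheel value is held for `samples_per_step` samples (10 ms at 48 kHz).
    """
    rng = random.Random(seed)
    y = 0.0
    out = []
    for cc in wheel_values:
        cutoff = wheel_to_cutoff(cc)
        a = 1.0 - math.exp(-2.0 * math.pi * cutoff / sample_rate)  # smoothing amount
        for _ in range(samples_per_step):
            x = rng.uniform(-1.0, 1.0)
            y += a * (x - y)  # y[n] = y[n-1] + a * (x[n] - y[n-1]): lets lows through
            out.append(y)
    return out


# A quick rise to the peak, then a slower taper (wheel values 0-127).
sweep = noise_sweep(list(range(0, 128, 8)) + list(range(127, -1, -4)))
```

The lower the cutoff, the more of the hiss is smoothed away, so sweeping the wheel up and down produces the rising and falling “whoosh”.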

The synth is also run through one of my favourite algorithmic reverberation effect plugins that specializes in producing extremely ambient reverb:

Valhalla DSP can always be relied upon to produce top-notch plugins that are affordable and easy to use.
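Valhalla’s algorithms are proprietary and far more elaborate, but the textbook starting point for algorithmic reverb is Manfred Schroeder’s design: several feedback comb filters in parallel, followed by allpass filters in series that thicken the echoes into a smooth wash. The Python sketch below follows that structure; the delay times and gains are values in the spirit of the classic design, not settings from any plugin, and it uses one shared comb feedback for simplicity.

```python
def comb(x, delay, feedback):
    """Feedback comb filter: y[n] = x[n] + feedback * y[n - delay]."""
    y = [0.0] * len(x)
    for n in range(len(x)):
        y[n] = x[n] + (feedback * y[n - delay] if n >= delay else 0.0)
    return y


def allpass(x, delay, gain):
    """Schroeder allpass: y[n] = -g*x[n] + x[n - delay] + g*y[n - delay].
    It passes all frequencies at equal level but smears echoes in time."""
    y = [0.0] * len(x)
    for n in range(len(x)):
        x_d = x[n - delay] if n >= delay else 0.0
        y_d = y[n - delay] if n >= delay else 0.0
        y[n] = -gain * x[n] + x_d + gain * y_d
    return y


def schroeder_reverb(x, sample_rate=48_000, decay=0.84):
    """Four parallel combs (the decaying echo pattern), then two allpasses (diffusion)."""
    comb_ms = (29.7, 37.1, 41.1, 43.7)  # unequal lengths so echoes don't line up
    combs = [comb(x, int(sample_rate * ms / 1000), decay) for ms in comb_ms]
    wet = [sum(vals) / len(combs) for vals in zip(*combs)]
    for ms, g in ((5.0, 0.7), (1.7, 0.7)):
        wet = allpass(wet, int(sample_rate * ms / 1000), g)
    return wet


# Impulse response: one click followed by one second of silence.
sr = 8_000
tail = schroeder_reverb([1.0] + [0.0] * sr, sample_rate=sr)
```

Raising `decay` toward 1.0 lengthens the tail, which is the basic lever behind the “extremely ambient” settings such plugins specialize in.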

Other synthesized elements include subtle atmospheric pads:

As well as richer, more textured ones:

Even here, the basic synthesizer sound is run through a number of effect plugins, including a set of tools in Reaktor that allow me to modulate the effects over time in interesting ways:

Molekular is what’s called a Reaktor “ensemble”; you can think of Reaktor as Minecraft for music tools.
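One common way to modulate an effect over time is a low-frequency oscillator (LFO) that slowly nudges a parameter up and down. The Python sketch below is a generic illustration, not how Molekular works internally; the rate, depth, and the delay-mix target are hypothetical.

```python
import math


def lfo(rate_hz: float, seconds: float, control_rate: int = 100, shape: str = "sine"):
    """Yield low-frequency oscillator values in [-1, 1], `control_rate` per second."""
    for i in range(int(seconds * control_rate)):
        phase = (rate_hz * i / control_rate) % 1.0  # position in the cycle, 0..1
        if shape == "sine":
            yield math.sin(2.0 * math.pi * phase)
        elif shape == "triangle":
            yield 4.0 * abs(phase - 0.5) - 1.0
        else:
            raise ValueError(f"unknown LFO shape {shape!r}")


def modulate(base: float, depth: float, lfo_values, lo: float, hi: float):
    """Swing an effect parameter around `base` by up to +/- `depth`, clamped to [lo, hi]."""
    return [min(hi, max(lo, base + depth * v)) for v in lfo_values]


# Hypothetical target: drift a delay's wet mix between 0.2 and 0.6, one cycle every 8 s.
mix_curve = modulate(0.4, 0.2, lfo(rate_hz=0.125, seconds=16), lo=0.0, hi=1.0)
```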

Traditional instrumentation makes an important appearance at 1:48, with an orchestral string section providing a classical-sounding segment before the cue’s climax:

This segment is set up as a sort of call-and-response, with each section of the string orchestra performing a similar phrase before handing it off to the next:

You can also choose to view a MIDI region as traditional musical notation, like this.

The end of the climax features another of those moments of calculated tension that I mentioned earlier.

The impact at 2:18 has most of the instruments playing a normal-sounding major third, but the deep bass hit is playing a dissonant low C note, throwing the entire chord off-balance just when we expect to get a satisfying resolution. The strings then slowly resolve back to a pleasant chord to end the phrase.

Looping

Perhaps the trickiest part of this work, musically speaking, is finding a way to make the music’s loop point feel as seamless as possible.

Ideally, you want each piece of music to be long enough that it doesn’t get grating after many loops, while also being short enough to keep file sizes reasonable. Rebel Inc.’s cues are generally about 3 minutes long, but in-game, of course, they loop for as long as your session lasts.

My goal is to make that loop point as invisible as I can so that the end of the piece feels like it leads into the beginning. This is easier said than done as it has both a musical component and a technical one.

The musical side requires structuring the piece in such a way as to have the ending section transition naturally into the beginning. Sometimes, I end up creating the loop and then moving the start point of the piece somewhere later than I initially intended because it helps hide the loop point better.

The technical side is a matter of establishing—to the millisecond—where the perfect looping moment is, then cutting the audio off at that point and pasting the reverb tail over the beginning so there’s no evidence of there ever having been a divide.
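The tail-pasting step can be sketched in a few lines of Python. It assumes a render that plays the piece once and then keeps recording while reverb and delays ring out past the loop point; everything after `loop_length` is treated as tail and summed onto the start of the loop, so the ringing carries across the seam. The function name is hypothetical.

```python
def make_seamless_loop(render, loop_length):
    """Turn a bounce into a seamless loop by folding its tail over the start.

    `render` is the whole piece followed by whatever keeps ringing after the
    loop point (reverb tails, delay repeats); `loop_length` is the loop point
    in samples. Everything past it is mixed onto the loop's first samples, so
    when playback jumps back to the top, the echoes are still there.
    """
    if not 0 < loop_length <= len(render):
        raise ValueError("loop point must fall inside the render")
    loop = list(render[:loop_length])
    tail = render[loop_length:]
    if len(tail) > len(loop):
        raise ValueError("tail is longer than the loop; it would need to wrap more than once")
    for i, sample in enumerate(tail):
        loop[i] += sample  # the tail now plays on top of the restart
    return loop


print(make_seamless_loop([1.0, 2.0, 3.0, 4.0, 0.5, 0.25], loop_length=4))
# [1.5, 2.25, 3.0, 4.0]
```

Working in samples rather than milliseconds also makes the cut sample-accurate: at 48 kHz, one millisecond spans 48 samples.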

Though it wasn’t relevant for Rebel Inc., I should also mention that for many games, each piece of music is further divided into separate recordings for groups of instruments. Those separate recordings layered together make up the full piece, but in-game they’re usually programmed to fade in and out in response to certain events. More percussion when things get tense, for example.

Accomplishing this is an even more complex feat, and one that’s beyond the scope of this piece.
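Although Rebel Inc. didn’t use it, the basic idea behind this kind of layering can be sketched in Python: each stem gets a target volume derived from a game-supplied “tension” value, and gains move toward their targets gradually so layers fade rather than pop. The `Stem` class, the tension scale, and the fade rate are hypothetical; real projects usually handle this in audio middleware or the game engine.

```python
from dataclasses import dataclass


@dataclass
class Stem:
    name: str
    fade_in_at: float  # tension (0-1) where this layer starts to appear
    full_at: float     # tension where it reaches full volume

    def target_gain(self, tension: float) -> float:
        if tension <= self.fade_in_at:
            return 0.0
        if tension >= self.full_at:
            return 1.0
        return (tension - self.fade_in_at) / (self.full_at - self.fade_in_at)


def step_gains(stems, current, tension, max_change=0.05):
    """Move every stem's gain toward its target by at most `max_change` per
    update (e.g. once per game tick), so layers fade rather than pop."""
    updated = {}
    for stem in stems:
        gain = current.get(stem.name, 0.0)
        delta = stem.target_gain(tension) - gain
        updated[stem.name] = gain + max(-max_change, min(max_change, delta))
    return updated


# "pads" is always on (full at tension 0); percussion only joins when things get tense.
stems = [Stem("pads", -1.0, 0.0), Stem("strings", 0.2, 0.5), Stem("percussion", 0.4, 0.8)]
gains = {}
for _ in range(30):  # tension spikes to 0.9 and stays there for 30 updates
    gains = step_gains(stems, gains, tension=0.9)
```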

Bringing it All Together

Once I have the notes in and have structured the loop, I’m still only part of the way through the process.

Each instrument track usually benefits from several core processing tools like equalization (EQ), compression, and reverb to help situate it in the mix and ensure that everything sounds cohesive. Consider that each of those 50 or so instrument tracks might host a sample library recorded using completely different equipment in a completely different space. For the mix to be convincing, I have to use those processing tools to match the ambience and make everything sound like it was recorded together.

I also have to be mindful of instrument placement—things have to be panned (moved laterally), pulled forward, pushed back—so that it doesn’t sound like everything is coming from a bizarre totem pole of musicians right in front of you.
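Panning is the simplest of those placement tools to show in code. The Python sketch below uses the common constant-power (-3 dB) pan law, one of several laws DAWs offer; it only covers left-right placement, while depth is usually suggested with level, EQ, and reverb.

```python
import math


def constant_power_pan(pan: float) -> tuple[float, float]:
    """Left/right gains for pan from -1.0 (hard left) to +1.0 (hard right).

    Constant-power law: left**2 + right**2 == 1 at every position, so a sound
    keeps roughly the same perceived loudness as it moves; at center each side
    sits about 3 dB down (gain ~0.707).
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    angle = (pan + 1.0) * math.pi / 4.0  # maps -1..1 onto 0..pi/2
    return math.cos(angle), math.sin(angle)


for pan in (-1.0, -0.5, 0.0, 0.5, 1.0):
    left, right = constant_power_pan(pan)
    print(f"pan {pan:+.1f}: L {left:.3f}  R {right:.3f}")
```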

Then I have to make sure the mix sounds good not only on excellent speakers and headphones but also—and especially—on terrible phone speakers. It’s a mobile game first, after all, so I have to be mindful of how the majority of players will be experiencing the music.

(Unless they buy the soundtrack, of course!)

Once each track is properly processed, I also run the entire mix through a few key plugins to polish things off. The most important are a mix buss compressor that “glues” everything together and adds a bit of grit:

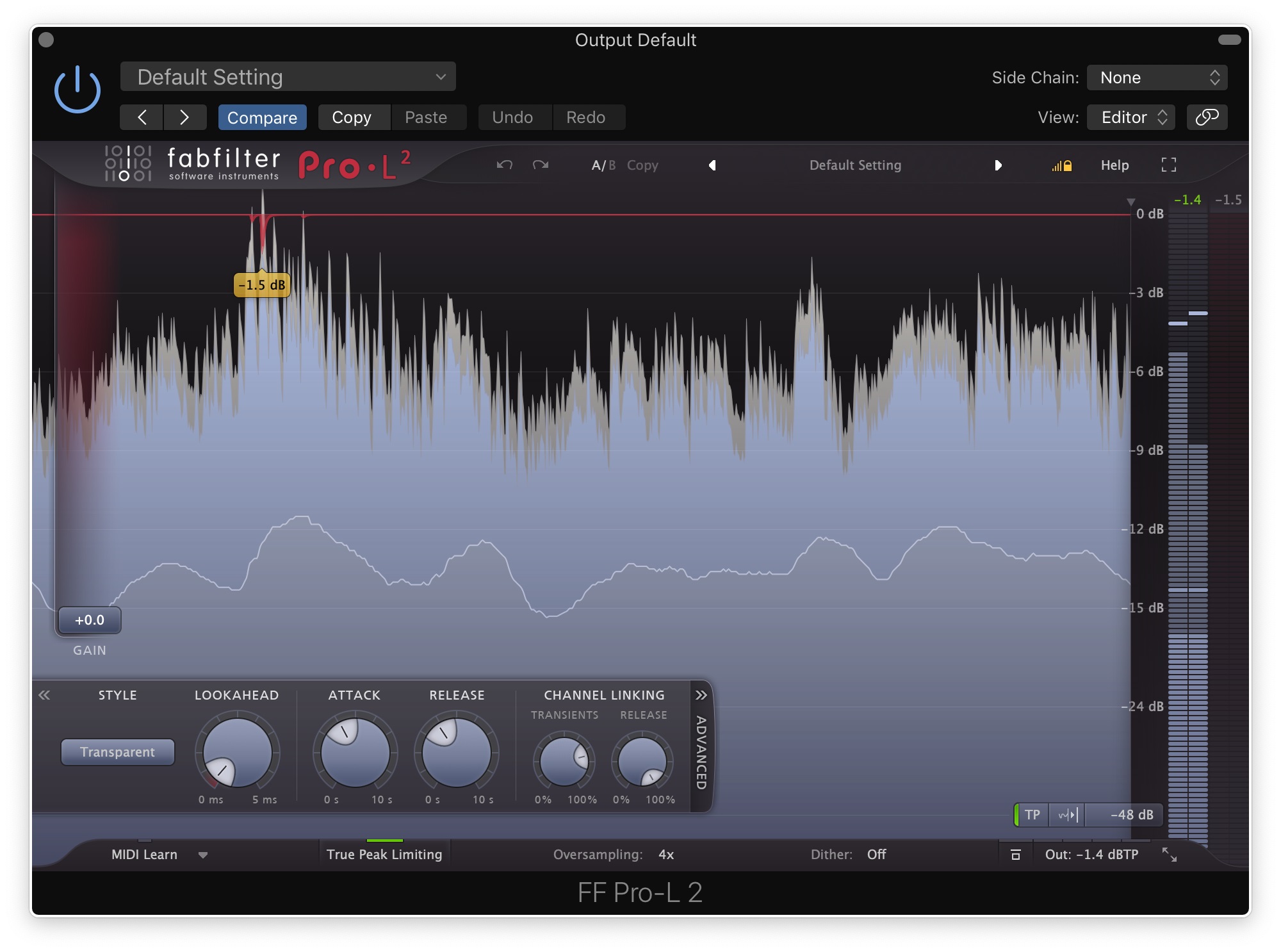

Followed by a limiter plugin that ensures the mix is nice and loud, without ever distorting:
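Stripped to their essentials, a bus compressor and a limiter are both automatic volume controls. The Python sketch below shows a basic feed-forward compressor (an envelope follower plus a hard-knee gain computer) and a simple peak limiter that clamps any sample over the ceiling instantly and then recovers. The settings are illustrative assumptions; commercial bus compressors and limiters add soft knees, look-ahead, and oversampling that this sketch leaves out.

```python
import math


def to_db(x: float) -> float:
    return 20.0 * math.log10(max(abs(x), 1e-12))  # floor avoids log10(0)


def gain_reduction_db(level_db: float, threshold_db: float, ratio: float) -> float:
    """Hard-knee gain computer: above the threshold, output rises only
    1/ratio dB per input dB. Returns a negative dB change (0 below threshold)."""
    over = level_db - threshold_db
    return 0.0 if over <= 0.0 else over * (1.0 / ratio - 1.0)


def compress(samples, sample_rate, threshold_db=-18.0, ratio=2.0,
             attack_ms=10.0, release_ms=100.0, makeup_db=0.0):
    """Feed-forward compressor: a peak envelope follower drives the gain computer."""
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    env, out = 0.0, []
    for x in samples:
        coeff = attack if abs(x) > env else release  # rise quickly, fall slowly
        env = coeff * env + (1.0 - coeff) * abs(x)
        change_db = gain_reduction_db(to_db(env), threshold_db, ratio) + makeup_db
        out.append(x * 10.0 ** (change_db / 20.0))
    return out


def limit(samples, sample_rate, ceiling_db=-1.0, release_ms=50.0):
    """Peak limiter: clamps gain instantly so no sample exceeds the ceiling,
    then lets it recover smoothly toward unity."""
    ceiling = 10.0 ** (ceiling_db / 20.0)
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    gain, out = 1.0, []
    for x in samples:
        needed = ceiling / abs(x) if abs(x) > ceiling else 1.0
        gain = min(needed, release * gain + (1.0 - release))  # recover toward 1.0
        out.append(x * gain)
    return out


sr = 48_000
tone = [0.9 * math.sin(2 * math.pi * 220 * n / sr) for n in range(sr // 10)]
mastered = limit(compress(tone, sr, ratio=2.0, makeup_db=4.0), sr, ceiling_db=-1.0)
```

The makeup gain is what makes the mix louder; the limiter is what guarantees the extra level never pushes a sample past the ceiling.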

After all of that, I can finally export the cue, convert it into the various delivery formats required to keep file sizes reasonable for both mobile and desktop versions of the game, and send it for feedback.

Once the team is happy and I’m happy, the music gets implemented into the game and generally goes out to the beta stream first for player feedback before inclusion in the public version of the game.

Conclusion

If you’ve stuck with me this far, you now have a much better understanding of how the music of Rebel Inc. came together, as well as—I hope—a sense of what modern music production entails.

I’m excited to finally share this kind of behind-the-scenes look. It’s partly because I’m proud of the work, but it’s also my attempt to remind you that every piece of music you listen to has likely been agonized over, note by note, in ways that you might not have imagined.

Understanding the scope of this effort through my own music work has made me more appreciative of all music. I’m no longer comfortable criticizing certain genres because I’ve spent enough time writing enough styles of music to understand that each presents its own significant challenges.

If you want to support the music artists you love, do me a favour and buy their albums/soundtracks/etc. directly. Not only do you get a higher-quality copy to listen to, but we make so little money from streaming that it’s basically worth it only as a marketing tactic to get our music in front of more people.

A single direct sale can be worth literally thousands of streams.

The Rebel Inc. soundtrack is available on Bandcamp and on Steam.

The Plague Inc. soundtrack is available on Bandcamp and on Steam.

You can find more of my music on Bandcamp and SoundCloud.